TDA for Transformers

An informal survey

Although Topological Data Analysis (TDA) and Transformer neural networks may seem unrelated, a number of works sit at the intersection of the two. Recently, the Persformer [5] was introduced as the first Transformer architecture that accepts persistence diagrams as input. The authors claim that it significantly outperforms previous topological neural network architectures on classical synthetic and graph benchmark datasets. Moreover, it satisfies a universal approximation theorem.
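A toy sketch can illustrate the basic idea of feeding a persistence diagram to a Transformer. It is not the Persformer implementation, and every name, dimension and weight below is a hypothetical choice. Each diagram point (birth, death) is treated as a token. The token is linearly embedded, passed through one single-head self-attention layer with no positional encoding, and then mean-pooled. Nothing depends on token order, so the output is invariant to permuting the points. This matches the view of a diagram as an unordered set of points.

```python
import math
import random


def matmul(a, b):
    """Multiply an (n x k) matrix by a (k x m) matrix, both given as lists of lists."""
    return [[sum(a[i][t] * b[t][j] for t in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def softmax(row):
    """Numerically stable softmax of a list of scores."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    s = sum(exps)
    return [e / s for e in exps]


def random_matrix(rows, cols, rng):
    return [[rng.gauss(0.0, 0.5) for _ in range(cols)] for _ in range(rows)]


class DiagramAttentionEncoder:
    """Hypothetical toy encoder: embed -> one self-attention layer -> mean pool.
    Weights are random, not learned; this only demonstrates the data flow."""

    def __init__(self, d_model=4, seed=0):
        rng = random.Random(seed)
        self.d = d_model
        self.w_embed = random_matrix(2, d_model, rng)  # (birth, death) -> d_model
        self.w_q = random_matrix(d_model, d_model, rng)
        self.w_k = random_matrix(d_model, d_model, rng)
        self.w_v = random_matrix(d_model, d_model, rng)

    def __call__(self, diagram):
        # diagram: list of (birth, death) pairs. No positional encoding is added,
        # so a token is identified only by its coordinates.
        x = matmul([list(p) for p in diagram], self.w_embed)
        q, k, v = matmul(x, self.w_q), matmul(x, self.w_k), matmul(x, self.w_v)
        scale = math.sqrt(self.d)
        out = []
        for qi in q:
            # Scaled dot-product attention of this token over all tokens.
            scores = [sum(a * b for a, b in zip(qi, kj)) / scale for kj in k]
            weights = softmax(scores)
            out.append([sum(w * vj[c] for w, vj in zip(weights, v)) for c in range(self.d)])
        # Mean pooling over tokens gives a fixed-size, order-independent vector.
        return [sum(col) / len(out) for col in zip(*out)]
```

For example, `DiagramAttentionEncoder()([(0.0, 1.0), (0.2, 0.5), (0.1, 0.9)])` returns a 4-dimensional vector. Reversing the list of points gives the same vector up to floating-point rounding. A real model would stack several such layers and learn the weights.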

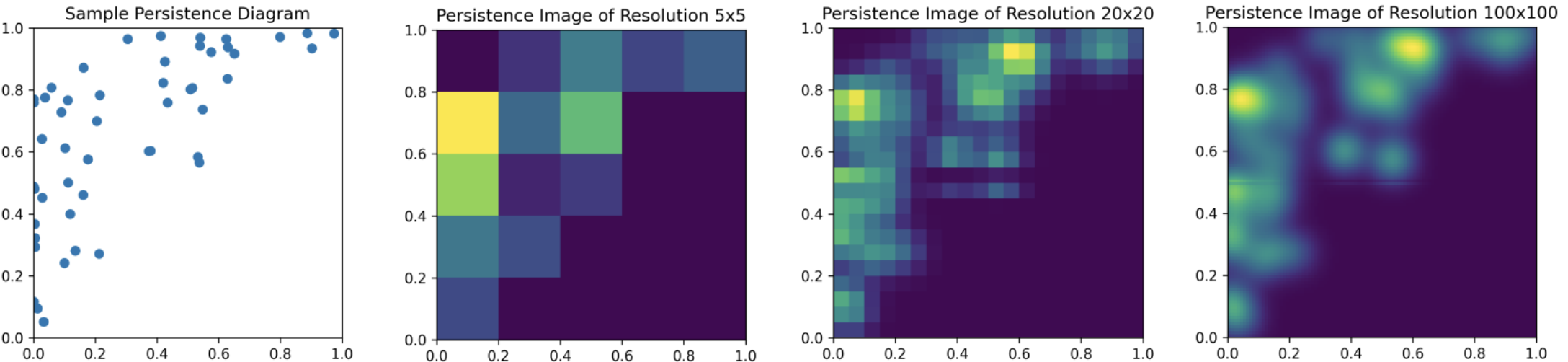

Figure 1: Persistence image of a persistence diagram. From left to right: persistence diagram, persistence images with different resolutions. Image taken from [4].
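Persistence images like those in Figure 1 can be approximated in a few lines. The sketch below assumes a common construction with four steps:

1. Map each point (b, d) to birth-persistence coordinates (b, d - b).
2. Place a Gaussian of width `sigma` on each point.
3. Weight each Gaussian by a function that grows with persistence. A simple linear ramp is used here, which is an assumption.
4. Evaluate the summed surface on a grid.

The function name, the parameter defaults, and the choice to sample pixel centres rather than integrate over each pixel are also illustrative assumptions.

```python
import math


def persistence_image(diagram, resolution=(10, 10), sigma=0.1,
                      birth_range=(0.0, 1.0), pers_range=(0.0, 1.0)):
    """Hypothetical toy persistence image: a persistence-weighted sum of Gaussians
    sampled at pixel centres (an approximation of the per-pixel integral).
    Returns a list of rows: len == resolution[1], each row of len resolution[0]."""
    nx, ny = resolution
    bx = (birth_range[1] - birth_range[0]) / nx  # pixel width along birth axis
    by = (pers_range[1] - pers_range[0]) / ny    # pixel height along persistence axis
    # Birth-persistence coordinates: (b, d) -> (b, d - b).
    points = [(b, d - b) for b, d in diagram]
    max_pers = max((p for _, p in points), default=1.0) or 1.0
    image = [[0.0] * nx for _ in range(ny)]
    for row in range(ny):
        y = pers_range[0] + (row + 0.5) * by
        for col in range(nx):
            x = birth_range[0] + (col + 0.5) * bx
            total = 0.0
            for b, p in points:
                weight = p / max_pers  # linear ramp: points on the diagonal count zero
                g = math.exp(-((x - b) ** 2 + (y - p) ** 2) / (2 * sigma ** 2))
                total += weight * g / (2 * math.pi * sigma ** 2)
            image[row][col] = total * bx * by  # density times pixel area
    return image
```

Changing `resolution` produces coarser or finer images of the same diagram, as in the panels of Figure 1. Flattening the result gives a fixed-length vector that standard models can consume.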

Several works have also examined BERT [2] from a geometric and topological point of view [3, 1, 4]. All of them treat attention maps as adjacency matrices of weighted graphs and compute topological statistics of these graphs. Works [3, 1] used various summary statistics of persistence barcodes as features, while [4] used persistence images. In particular, [3] explored how these topological features relate to the naturalness of text, i.e. whether a text was artificially generated or written by a human, and [1] explored how they correlate with linguistic phenomena such as grammatical correctness.
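The attention-map-to-barcode pipeline can be sketched for the 0-dimensional case. The sketch makes several choices the text does not specify:

- The attention matrix is symmetrised with max(a_ij, a_ji).
- Weights are turned into distances as 1 - w, so strong attention means a short edge.
- Only H0 (connected components) is computed, using Kruskal's algorithm with union-find.

The statistics at the end are examples of barcode summaries: number of bars, total, mean and maximum length, and persistent entropy. They are not the exact feature sets used in [3, 1].

```python
import math


def attention_to_distances(attn):
    """Symmetrise an attention matrix and turn weights into distances.
    Assumption: strong attention = short edge, via d_ij = 1 - max(a_ij, a_ji)."""
    n = len(attn)
    return [[0.0 if i == j else 1.0 - max(attn[i][j], attn[j][i]) for j in range(n)]
            for i in range(n)]


def h0_barcode(dist):
    """0-dimensional persistence of the distance filtration (Kruskal + union-find).
    Every token is born at 0; a bar dies when its component merges into another."""
    n = len(dist)
    parent = list(range(n))

    def find(u):
        while parent[u] != u:
            parent[u] = parent[parent[u]]  # path halving
            u = parent[u]
        return u

    edges = sorted((dist[i][j], i, j) for i in range(n) for j in range(i + 1, n))
    deaths = []
    for d, i, j in edges:
        ri, rj = find(i), find(j)
        if ri != rj:  # this edge merges two components: one bar dies at d
            parent[ri] = rj
            deaths.append(d)
    # n - 1 finite bars; the last surviving component never dies and is omitted.
    return [(0.0, d) for d in deaths]


def barcode_features(bars):
    """A few example summary statistics of a barcode."""
    lengths = [d - b for b, d in bars]
    total = sum(lengths)
    entropy = 0.0
    if total > 0:
        entropy = -sum(l / total * math.log(l / total) for l in lengths if l > 0)
    return {
        "num_bars": len(lengths),
        "sum_length": total,
        "mean_length": total / len(lengths) if lengths else 0.0,
        "max_length": max(lengths, default=0.0),
        "entropy": entropy,
    }
```

Consider a 3-token attention map such as `[[0.6, 0.3, 0.1], [0.5, 0.4, 0.1], [0.2, 0.2, 0.6]]`. Tokens 0 and 1 merge first at distance 0.5, and token 2 joins at 0.8. This gives the two finite bars (0, 0.5) and (0, 0.8). Higher-dimensional features such as cycles would require a simplicial complex library rather than union-find.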

References:

- [1] D. Cherniavskii, E. Tulchinskii, V. Mikhailov, I. Proskurina, L. Kushnareva, E. Artemova, S. Barannikov, I. Piontkovskaya, D. Piontkovski, and E. Burnaev. Acceptability judgements via examining the topology of attention maps. arXiv preprint arXiv:2205.09630, 2022.

- [2] J. Devlin, M.-W. Chang, K. Lee, and K. Toutanova. BERT: Pre-training of deep bidirectional transformers for language understanding. arXiv preprint arXiv:1810.04805, 2018.

- [3] L. Kushnareva, D. Cherniavskii, V. Mikhailov, E. Artemova, S. Barannikov, A. Bernstein, I. Piontkovskaya, D. Piontkovski, and E. Burnaev. Artificial text detection via examining the topology of attention maps. In Proceedings of the 2021 Conference on Empirical Methods in Natural Language Processing, pages 635–649, 2021.

- [4] I. Perez and R. Reinauer. The topological BERT: Transforming attention into topology for natural language processing. arXiv preprint arXiv:2206.15195, 2022.

- [5] R. Reinauer, M. Caorsi, and N. Berkouk. Persformer: A transformer architecture for topological machine learning. arXiv preprint arXiv:2112.15210, 2021.