Appendix D. Separation of a pair of emotions

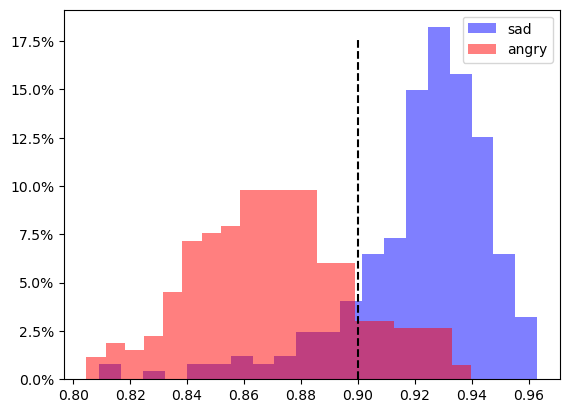

In a similar fashion to our experiments on separating individual speakers, we ran a series of experiments on separating individual emotions from each other. This is a "restricted" version of the Emotion Recognition task. We performed these studies on data from the IEMOCAP dataset. It turns out that for most pairs of emotions, no head is able to separate them "quite well" (in the terminology of Section 4, Restricted tasks). Here we present results for one pair of emotions that can be separated: anger and sadness.


Hm, sym0 distributions at head 4 from layer 2

Figure D.1. An example of Hm, sym0 distributions for Angry and Sad speech (for this plot we sampled about 500 utterances from each of the two classes). The dashed line is the threshold of the optimal classifier.
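The dashed threshold in Figure D.1 can be illustrated with a minimal sketch. It assumes that "optimal classifier" means the single cut-off on the scalar Hm, sym0 value that minimizes the empirical misclassification rate over the sampled utterances; the text does not spell out the exact criterion. The function name `optimal_threshold` and the synthetic scores in the usage example are hypothetical and are not the IEMOCAP values behind the figure.

```python
import numpy as np


def optimal_threshold(scores_a, scores_b):
    """Find the best 1-D threshold rule separating class A from class B.

    Assumption (hypothetical helper): "optimal" = lowest empirical
    misclassification rate for a rule "predict A if score > t"
    or "predict A if score <= t".

    Returns (threshold, a_is_above, error_rate).
    """
    a = np.asarray(scores_a, dtype=float)
    b = np.asarray(scores_b, dtype=float)
    # Sorted distinct values of the pooled sample.
    uniq = np.unique(np.concatenate([a, b]))
    # Candidate cut-offs: midpoints between neighbouring values,
    # plus one point below the minimum and one above the maximum.
    mids = (uniq[:-1] + uniq[1:]) / 2
    candidates = np.concatenate([[uniq[0] - 1.0], mids, [uniq[-1] + 1.0]])
    n = a.size + b.size

    best_t, best_above, best_err = None, True, np.inf
    for t in candidates:
        # Rule "A above t": errors are A at or below t plus B above t.
        err_above = (np.sum(a <= t) + np.sum(b > t)) / n
        # The opposite rule misclassifies exactly the complementary set.
        err_below = 1.0 - err_above
        if err_above < best_err:
            best_t, best_above, best_err = t, True, err_above
        if err_below < best_err:
            best_t, best_above, best_err = t, False, err_below
    return float(best_t), best_above, float(best_err)


if __name__ == "__main__":
    # Hypothetical synthetic scores, roughly 500 per class as in Figure D.1.
    rng = np.random.default_rng(0)
    angry = rng.normal(1.0, 0.5, size=500)
    sad = rng.normal(-1.0, 0.5, size=500)
    t, angry_above, err = optimal_threshold(angry, sad)
    print(f"threshold={t:.3f}, angry above: {angry_above}, error={err:.3f}")
```

Because the two distributions overlap, even the best cut-off leaves a nonzero error rate, which is the situation described for the utterances misclassified by the optimal classifier.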

Even the optimal classifier (dashed line in Figure D.1) misclassifies some utterances. Below we present examples of audio samples that this classifier classified correctly and incorrectly.

| Classified as angry... | | Classified as sad... | |
|---|---|---|---|
| correctly | misclassified | correctly | misclassified |